The rise of artificial intelligence means that everyone needs to be extra vigilant when reviewing information. To reduce the burden of checking work manually, tools that purport to detect AI-generated content have been developed. How good are these tools? Can educators, editors, and peer reviewers rely on them to quickly weed out obviously fraudulent authorship?

The Start of the Problem

One would think that an obvious indicator of possible AI-generated content is an impersonal writing style. However, that may not be entirely true.

The following article, though not AI-generated, would lead one to wonder about its origin:

Change management (File Integrity monitoring or FIM) and vulnerability management software are integral components of an organization’s risk management strategy within the domain of cybersecurity and IT management. Containers, exemplified by platforms like Docker and Kubernetes, have surged in popularity due to their efficiency and portability. They encapsulate applications and their dependencies into self-contained units, streamlining deployment and management across diverse environments.

This writing style is not a reason to immediately suspect AI-generated content. However, the results are interesting if one uses the popular tools that are supposed to detect if a piece is AI-generated.

To assess the validity of these AI authenticators, I decided to test out three of the most popular AI verification tools: ZeroGPT, ContentDetectorAI, and Originality.ai (a subscription-based service).

The piece shown above was run through the AI detectors with the following results:

ZeroGPT and ContentDetector both reported that the entire article was human-generated.

However, Originality.ai indicated that the piece was 100% AI-generated. Because the results were so inconsistent, I decided to run some independent tests.

The First Test

In the first test, I composed a short description of my recent vacation in a remote town in Italy. Then I prompted ChatGPT to write a story about a person who goes to a remote village in Italy. Upon running these stories through the scanning tools, the results were interesting, to say the least:

| Tool | My Italian Story | ChatGPT’s Italian Story |
| --- | --- | --- |
| ZeroGPT | 34.64% | 43.82% |
| ContentDetector | 76.92% | 67.50% |
| Originality.ai | 70% | 100% |

* percentages indicate copy that resembles AI-generated patterns

Even though Originality.ai was entirely correct in detecting the AI-generated story, I could not help but seriously reconsider my writing career, based on the results garnered by my original story submission. Since I do not fancy myself an expert writer, I decided to look to a couple of “more established” authors to see what these tools detected.

The Second Test – AI vs. Edgar Allan Poe

For my second test I used Edgar Allan Poe’s “The Tell-Tale Heart” and then I asked ChatGPT to write a story similar to Poe’s.

The AI version of Poe’s horror story came complete with illogical references to a heart and floorboards in a garden! These were obvious oversights, made as the AI attempted to substitute words from Poe’s original work. I was warmed by the knowledge that it at least knew of the original story.

However, since this is not a piece about the shortcomings of AI, let’s move on to the AI detector results.

| Tool | Edgar Allan Poe | AI-generated Story |
| --- | --- | --- |
| ZeroGPT | 0.00% | 10.46% |
| ContentDetector | 22.51% | 62.90% |
| Originality.ai | 0.00% | 100% |

* again, percentages indicate copy that resembles AI-generated patterns

How could ContentDetector, which presumably knows the classic Poe tale, suspect that 22.51% of it was AI-generated?

Third Test – Did the Beatles use AI?

For the third test, I asked AI to write a song in the style of The Beatles’ “Eleanor Rigby.”

Strangely enough, ContentDetector reported that the lyrics of the original song, written by John Lennon and Paul McCartney, were 25% AI-generated. This would settle the age-old debate over who is “The Fifth Beatle”: it was clearly AI.

| Tool | Lennon/McCartney | AI-generated Song |
| --- | --- | --- |
| ZeroGPT | 21.78% | 0.00% |
| ContentDetector | 25.00% | 42.11% |
| Originality.ai | 0.00% | 100% |

* again, percentages indicate copy that resembles AI-generated patterns
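The disagreement between the tools can be put into numbers with a little arithmetic. The following is a minimal sketch, not part of the original tests: it takes the scores reported in the three tests and, for each text, computes the gap between the highest and lowest score. The names `SCORES` and `spread` are made up for this illustration.

```python
# Scores reported in the three tests: the share of each text that a tool
# flagged as resembling AI-generated patterns, in percent.
SCORES = {
    "My Italian story": {"ZeroGPT": 34.64, "ContentDetector": 76.92, "Originality.ai": 70.00},
    "ChatGPT Italian story": {"ZeroGPT": 43.82, "ContentDetector": 67.50, "Originality.ai": 100.00},
    "Poe, The Tell-Tale Heart": {"ZeroGPT": 0.00, "ContentDetector": 22.51, "Originality.ai": 0.00},
    "ChatGPT Poe-style story": {"ZeroGPT": 10.46, "ContentDetector": 62.90, "Originality.ai": 100.00},
    "Lennon/McCartney lyrics": {"ZeroGPT": 21.78, "ContentDetector": 25.00, "Originality.ai": 0.00},
    "ChatGPT Beatles-style song": {"ZeroGPT": 0.00, "ContentDetector": 42.11, "Originality.ai": 100.00},
}


def spread(tool_scores):
    """Return the gap, in percentage points, between the highest and lowest score."""
    values = tool_scores.values()
    return max(values) - min(values)


# Print one line per text, showing how far apart the three tools landed.
for text, tool_scores in SCORES.items():
    print(f"{text:<28} spread: {spread(tool_scores):6.2f} points")
```

A spread near zero would mean the tools agree on a text; a large spread means that the verdict depends mostly on which tool was asked.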

Originality.ai Seems to be 100% Accurate

At first glance, it would appear that Originality.ai is highly accurate in its ability to distinguish human content from AI-generated content. However, this is nothing short of illusory, as it may have been trained on all the classic works of poetry and literature, as evidenced by its inability to recognize newer material. (Originality.ai rated a recently published article of mine, indicating that I am only 50% of the way through my transition along the robot migration journey. Also, recall that it rated my Italy vacation story in Test 1 as 70% AI-generated.) A couple of thoughts come to mind: Either Originality.ai is not trained on newer material, or it does not understand technical text. Either way, Originality.ai is as unreliable as the other AI detection tools.

What Can We Learn From This?

The most obvious lesson is that AI detection tools, in their current state, are extremely inaccurate, as evidenced by the results of all three tests. It is also clear that the results are occasionally contradictory, as witnessed with the odd “mostly AI-generated at 0%” result reported by ZeroGPT in Test 3. The apparent accuracy of Originality.ai should also be treated with caution, given its results shown above.

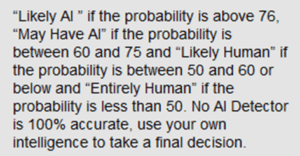

Similarly, each tool uses its own scoring method. ZeroGPT uses a low percentage threshold to deem something artificially generated. ContentDetector is far more liberal in its scoring, indicating that a score of 67% means only that the text may contain AI-generated content. According to the ContentDetector tool:

Note also that, according to ContentDetector, “No AI Detector is 100% accurate, use your own intelligence to take a final decision.” Bruce Schneier concisely corroborates these findings, stating that AI detection tools are unreliable.
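Because each tool draws its own line between “human” and “AI,” the same scores can lead to different verdicts. The sketch below is an illustration only: it applies a single cutoff to every tool, which is an assumption and not how any of the tools actually decide, and it counts how many of the six test texts each tool would label correctly. The names `RESULTS` and `accuracy` and the cutoffs of 25, 50, and 75 are made up for this example, and six texts are far too few to support firm conclusions.

```python
# Each entry: (text, written_by_ai, scores reported in the three tests, in percent).
RESULTS = [
    ("My Italian story", False, {"ZeroGPT": 34.64, "ContentDetector": 76.92, "Originality.ai": 70.00}),
    ("ChatGPT Italian story", True, {"ZeroGPT": 43.82, "ContentDetector": 67.50, "Originality.ai": 100.00}),
    ("Poe, The Tell-Tale Heart", False, {"ZeroGPT": 0.00, "ContentDetector": 22.51, "Originality.ai": 0.00}),
    ("ChatGPT Poe-style story", True, {"ZeroGPT": 10.46, "ContentDetector": 62.90, "Originality.ai": 100.00}),
    ("Lennon/McCartney lyrics", False, {"ZeroGPT": 21.78, "ContentDetector": 25.00, "Originality.ai": 0.00}),
    ("ChatGPT Beatles-style song", True, {"ZeroGPT": 0.00, "ContentDetector": 42.11, "Originality.ai": 100.00}),
]

TOOLS = ("ZeroGPT", "ContentDetector", "Originality.ai")


def accuracy(tool, cutoff):
    """Fraction of texts labeled correctly if a score >= cutoff means 'AI'.

    The shared cutoff is a hypothetical simplification for illustration.
    """
    correct = sum(
        (scores[tool] >= cutoff) == written_by_ai
        for _, written_by_ai, scores in RESULTS
    )
    return correct / len(RESULTS)


# Show how each tool's tally changes as the assumed cutoff moves.
for cutoff in (25, 50, 75):
    summary = ", ".join(f"{tool}: {accuracy(tool, cutoff):.0%}" for tool in TOOLS)
    print(f"cutoff {cutoff}% -> {summary}")
```

A tool’s tally can change with the cutoff alone, which is one reason a raw percentage from one detector cannot be compared directly with a percentage from another.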

What Do We Do with This Information?

There is no way to know definitively whether an author is using AI or simply writes in a very clinical style. However, it is well documented that AI often “hallucinates.” Can we assume that fictional information, hyperlinks, or references are clear indicators of AI-generated content? At this point, we must continue to rely on our human insight, knowledge, and research, and leave the detection tools for a later time when they may become more accurate.
